Flaky Tests: The Tester's F Word

Jared Yarn

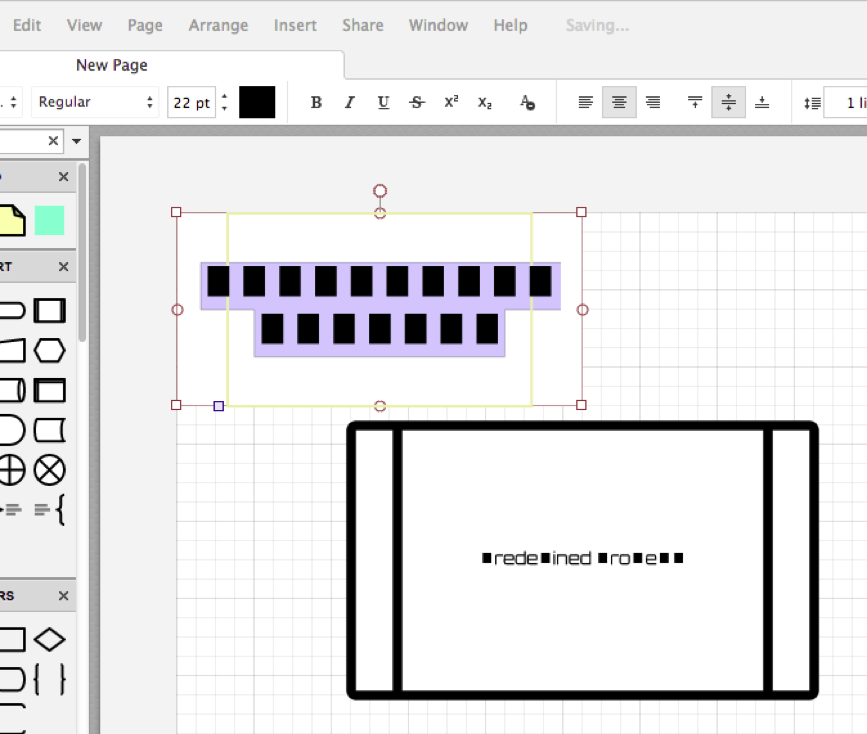

Randomness in Code Under Test

Tests should be deterministic: the same input should always produce the same output. Every test follows this simple principle. It feeds a particular set of inputs to the code under test and compares the actual output to an expected output. When the two do not match, the test fails.

One of the first flaky tests we ran into at Lucid came from a violation of this rule. This particular bug caused all the integration tests for our editor page to become flaky. Every one of our integration tests for the editor creates a document. Every document is required to have at least one page, and every page has at least one panel. When our panels were created, they were assigned a unique ID. It turned out that if the ID contained a ~ character, the document would enter an infinite loop and never load. Absolutely terrible.

The bug could show up in any test for the editor and would virtually never reproduce when someone was investigating and debugging the test. The core problem we had in debugging and understanding the ~ bug was that the code under test generated the IDs randomly. We had no way of tracking the differences between runs of the same test because the test code being run was exactly the same.

We discovered that the fix was to add optional extra logging that could be turned on during testing, specifically around anything that the code under test might do differently between test runs. This allows us to turn on the extra logging, run the same test hundreds or thousands of times (if necessary), and then debug based on the logs.

Race Conditions

The next time we were bitten badly by flaky tests was in the summer of 2015. Fonts are a major part of our product, both in Lucidchart and Lucidpress. In order to render fonts in the browser, we need to download all the glyphs. That summer, we made fonts load much faster by moving all of them to a CDN and bundling font faces, so the browser only needed to make one request for all the faces of a font instead of a separate request for each face. Naturally, this meant a major refactor of our front-end JavaScript and how it gets the fonts.

In our integration tests, we have a series of tests that perform a particular set of actions and then take a screenshot for comparison. This is particularly important for testing rendering on the main canvas of our editor. For some reason, all of these image comparison tests became flaky after one commit. A failure only happened about once in every 100 tests, but there was no way to identify which test would break next. In fact, it happened so rarely that it fell under our threshold for flaky tests, and we didn’t even start looking at it at first. But then the user tickets started rolling in about text issues.
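The conclusion of this post recommends testing anything that might be a race condition with a mock timer. The details of the font bug are not given here, so the following is only a minimal sketch of that recommendation under invented assumptions: `FakeClock`, `Canvas`, and `renderAfter` are hypothetical names, and the scenario (a render that may run before a font has arrived) is an illustration, not a description of the actual bug.

```typescript
type Callback = () => void;

interface Clock {
  setTimeout(cb: Callback, delayMs: number): void;
}

// Minimal fake clock: callbacks fire only when the test advances time.
class FakeClock implements Clock {
  private now = 0;
  private queue: { at: number; cb: Callback }[] = [];

  setTimeout(cb: Callback, delayMs: number): void {
    this.queue.push({ at: this.now + delayMs, cb });
  }

  // Move time forward and run every due callback in time order.
  advance(ms: number): void {
    const target = this.now + ms;
    for (;;) {
      const due = this.queue
        .filter(t => t.at <= target)
        .sort((a, b) => a.at - b.at)[0];
      if (!due) break;
      this.queue.splice(this.queue.indexOf(due), 1);
      this.now = due.at;
      due.cb();
    }
    this.now = target;
  }
}

// Hypothetical code under test: draws text with whichever font is available.
class Canvas {
  fontLoaded = false;
  drawnWith: string[] = [];

  loadFont(clock: Clock, latencyMs: number): void {
    clock.setTimeout(() => { this.fontLoaded = true; }, latencyMs);
  }

  scheduleRender(clock: Clock, delayMs: number): void {
    clock.setTimeout(() => {
      this.drawnWith.push(this.fontLoaded ? "custom-font" : "fallback-font");
    }, delayMs);
  }
}

// Runs one scenario with fully controlled timing and reports the font used.
function renderAfter(fontLatencyMs: number, renderDelayMs: number): string {
  const clock = new FakeClock();
  const canvas = new Canvas();
  canvas.loadFont(clock, fontLatencyMs);
  canvas.scheduleRender(clock, renderDelayMs);
  clock.advance(1000);
  return canvas.drawnWith[0] ?? "nothing-drawn";
}

const fastFont = renderAfter(10, 50); // font arrives before the render
const slowFont = renderAfter(80, 50); // render wins the race
console.log(fastFont, slowFont);
```

Because the test decides exactly when each callback fires, both orderings can be exercised on every run instead of waiting for the rare one to show up by chance.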

Parallelization Disaster

Our most recent adventure in flaky tests came from trying to parallelize our tests so that a build would take minutes instead of several hours. This time the flakiness was not caused by a bug in our code but by our testing framework. There were two major issues, both dealing with shared resources, that caused flakiness as soon as we ran multiple tests at the same time.

The first issue was a shared Google account used in some of our tests. In our integration tests, we used this Gmail account to test both the emails being sent to users and our integrations with Google Drive. Overall, we had about 15 tests that logged into the same Google account. When the tests ran sequentially, it was not a big deal, as each test logged out when it finished. When we moved to parallelization, there was no telling how many browsers were trying to log into the same account at the same time. Sometimes Google sent us to a warning page, and sometimes it logged us out of a different session because we had too many simultaneous sessions open. To resolve this problem, we started using a testing service for mailings (such as Mailinator) and multiple Gmail accounts on a single domain for testing Google integrations.

Parallelization: The paths may cross!

The second major issue was our clipboard tests. We were one of the first web applications to handle copy and paste via JavaScript. To do this, we got access to the system clipboard and responded to standard clipboard events. Because we were using a system resource, the tests could not run on the same system at the same time without implementing some sort of locking on the resource. For example, one test would perform a copy event and copy the word “Test,” and then another test would perform a paste event and expect the word “Block” to be pasted—but “Test” would be pasted instead. The only way to parallelize these is to run them on separate machines at the same time. We let SBT, our Scala build tool, handle the parallelization by tagging all the clipboard tests and then configuring SBT to only run one of those tests at a time.
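The clipboard collision, and the effect of a lock, can be reproduced in a few lines. This is a rough sketch rather than what we did (we used SBT tagging, not code like this): `Mutex`, `clipboard`, and `copyThenPaste` are hypothetical stand-ins, and a promise-based lock like this one only serializes tasks inside a single process.

```typescript
// Promise-chain mutex: each caller waits for the previous holder to finish.
class Mutex {
  private tail: Promise<void> = Promise.resolve();

  runExclusive<T>(task: () => Promise<T>): Promise<T> {
    const result = this.tail.then(task);
    // Keep the chain usable even if a task rejects.
    this.tail = result.then(() => undefined, () => undefined);
    return result;
  }
}

// Stand-in for the system clipboard: one slot shared by every test.
const clipboard = { text: "" };
const clipboardLock = new Mutex();

// Hypothetical test body: copy a word, yield to other tasks, then paste.
async function copyThenPaste(word: string): Promise<string> {
  clipboard.text = word;
  await Promise.resolve(); // other tests get a chance to run here
  return clipboard.text;
}

async function demo(): Promise<{ unlocked: string[]; locked: string[] }> {
  // No lock: the second copy overwrites the first before it is pasted.
  const unlocked = await Promise.all([
    copyThenPaste("Block"),
    copyThenPaste("Test"),
  ]);
  // With the lock: each copy/paste pair completes before the next starts.
  const locked = await Promise.all([
    clipboardLock.runExclusive(() => copyThenPaste("Block")),
    clipboardLock.runExclusive(() => copyThenPaste("Test")),
  ]);
  return { unlocked, locked };
}

const demoResult = demo();
demoResult.then(r => console.log(r));
```

In the unlocked run, the test that copied “Block” pastes “Test” instead, which is the failure described above. Tests running in separate processes on one machine would need a lock that spans processes, or a scheduler-level restriction like the SBT configuration.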

Conclusion

Flaky tests ruin testing suites. They are some of the most painful bugs to debug, and they destroy an organization's confidence in a testing suite. Over the years, we have learned some lessons to help prevent adding flaky tests to a testing suite.

- Have optional detailed logging for code under test, especially for any random values it may use.

- Write functional tests for anything that might be a race condition with a mock timer.

- Don’t use shared resources in different test cases unless they are locked.
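The first lesson can be sketched roughly as follows, assuming a hypothetical ID generator: `makeIdGenerator`, `seededRandom`, and the `ALPHABET` string are invented for illustration and are not our production code. Logging is off unless a test passes a `log` function. The injectable seeded random source is an extra beyond the logging described above, included because it lets a logged run be replayed.

```typescript
type RandomSource = () => number; // returns a value in [0, 1)

interface IdGeneratorOptions {
  random?: RandomSource;            // injectable so a test can replay a run
  log?: (message: string) => void;  // optional detailed logging, off by default
}

// Hypothetical ID alphabet; it includes "~" like the IDs in the story above.
const ALPHABET = "abcdefghijklmnopqrstuvwxyz0123456789~-_.";

function makeIdGenerator(
  options: IdGeneratorOptions = {}
): (length: number) => string {
  const random = options.random ?? Math.random;
  const log = options.log;
  return (length: number): string => {
    let id = "";
    for (let i = 0; i < length; i++) {
      id += ALPHABET.charAt(Math.floor(random() * ALPHABET.length));
    }
    // Record every random value the code under test produced.
    if (log) log(`generated id: ${id}`);
    return id;
  };
}

// Small linear congruential generator: same seed, same sequence.
function seededRandom(seed: number): RandomSource {
  let state = seed >>> 0;
  return () => {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296;
  };
}

const logLines: string[] = [];
const nextId = makeIdGenerator({
  random: seededRandom(42),
  log: line => logLines.push(line),
});
const firstId = nextId(8);

// Replaying with the same seed reproduces the ID without any logging.
const replayedId = makeIdGenerator({ random: seededRandom(42) })(8);
console.log(logLines, firstId === replayedId);
```

With the log turned on, a test that fails once in a thousand runs leaves behind the exact ID it generated, so runs can be compared even though the test code itself never changed.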
