If it’s not a number, what is it? Demystifying NaN for the working programmer.

James Hart


There are few things that can trip up even experienced programmers more easily than floating-point arithmetic. New programmers often don't realize they are using floating-point numbers, or even know what floating-point numbers are. In modern programming, non-integer numbers often just are floating-point numbers: a black box that "just works," until it doesn't.

The problem is that floating-point numbers look and act almost like the numbers¹ we all learned about in school. The word "almost" hides an awful lot of complexity, though[1]; floating-point numbers cause an endless stream of strange behavior², odd edge cases, and general problems. There are entire textbooks and university-level courses on how to get useful results out of floating-point numbers.

Easily the strangest thing about floating-point numbers is the floating-point value "NaN". Its very name, short for "Not a Number", is a paradox: only floating-point values can be NaN, so from a type-system point of view, only numbers can be "not a number". NaN's actual behavior is even stranger, though. The most spectacular bit of weirdness is that NaN is not equal to itself. For instance, in JavaScript you can create this amazing snippet:

```
> var a = NaN
undefined
> a === a
false
> a !== a
true
```

That's just the beginning of NaN's misbehavior. Comparisons between NaN and any value, including NaN itself, evaluate to false. The lone exception is "not equal" (!= or !==), which is always true. This means, for instance, that NaN < 5 and NaN >= 5 are both false. The most reliable way to test for NaN is a language-provided function, such as Number.isNaN in JavaScript; the expression a === NaN is always false.
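Here is a slightly longer sketch of the same behavior, runnable in Node.js or a browser console. The variable names are only illustrative. It also shows a JavaScript-specific trap. The older global isNaN converts its argument to a number before testing, so it reports true for strings that merely fail to parse. Number.isNaN, by contrast, is true only for NaN itself.

```javascript
const a = NaN;

// Ordered comparisons with NaN are false, whichever side NaN is on.
const ordered = [a < 5, a <= 5, a > 5, a >= 5, 5 < a, 5 >= a];
console.log(ordered); // every entry is false

// Equality fails even against NaN itself; only "not equal" is true.
console.log(a === NaN, a == a, a !== a); // false false true

// Number.isNaN is true only for the NaN value itself. The older global
// isNaN converts its argument to a number first, so strings count too.
console.log(Number.isNaN(a), Number.isNaN("abc"), isNaN("abc")); // true false true
```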

Worse, NaNs can be a very serious problem because they are infectious. With a few exceptions, numerical calculations involving NaN produce more NaNs, meaning that a single NaN in the wrong place can turn a large set of useful numbers into useless NaNs very quickly.
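The following JavaScript sketch makes the infection concrete. It uses made-up sensor readings, one of which is NaN. Aggregates such as a sum or a maximum are poisoned completely. Element-wise operations only poison the element that was already bad. NaN ** 0 evaluating to 1 is one of the few exceptions mentioned above.

```javascript
// Made-up readings; 0 / 0 evaluates to NaN and stands in for a bad sample.
const readings = [20.5, 21.0, 0 / 0, 19.5];

// Aggregates touch every element, so one NaN poisons the whole result.
const total = readings.reduce((acc, x) => acc + x, 0);
const mean = total / readings.length;
console.log(total, mean); // NaN NaN

// Element-wise work only affects the element that was already NaN...
const doubled = readings.map((x) => x * 2);
console.log(doubled.join(", ")); // 41, 42, NaN, 39

// ...but the next aggregate over the results is NaN again.
console.log(Math.max(...doubled)); // NaN

// One of the few exceptions: anything to the power 0 is 1, even NaN.
console.log(NaN ** 0); // 1
```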

It’s no surprise that programmers who stumble over NaN without warning are confused. Even experienced programmers might think its existence a mistake or its behavior buggy. The truth is more complicated. Within contexts where floating-point numbers are the "best tool for the job", NaN is a terrible hack, but it is also the best option we have found. In those contexts, we are not likely to get rid of NaN any time soon.

The skeptics do have a point, though: NaN is a hack. The compromises that created modern floating-point math are very real and often still relevant, but they don't always apply. Despite that, many modern programming languages default to treating all non-integer arithmetic as floating-point arithmetic, which can introduce strange bugs and surprising behaviors for unprepared programmers. NaN is hardly the only example of this (the signed infinities are also oddly behaved), but it is certainly the most spectacular one.

To better understand NaN, let’s consider why NaN exists at all. We’ll also talk about some of its strange behavior. With this information, developers can understand floating-point arithmetic well enough to tell when they do and don't actually need it. We also describe alternatives to floating-point math that might work better in contexts where performance is less important.

¹ Technically they act most like the real numbers. The real numbers are what most people learn about in their elementary education, and are often just called numbers.

² Floating-point numbers technically violate many of the rules of basic algebra, such as the associativity of addition. Since the errors are usually small, we can most often just carry on regardless.

A brief history of NaN

Although NaN itself technically goes back only to the IEEE 754 floating-point standard first published in 1985, the whole story includes the entire history of floating-point arithmetic. In turn, floating-point arithmetic goes back to the very beginning of electronic computing[2].

Representing numbers is central to electronic computers' very existence. The first uses for computers were to compute, that is, to do arithmetic faster and more reliably than humans could. This meant that computers had to represent numbers somehow. Representing integers was and is relatively simple³, but once you needed fractions and irrational numbers, things became complicated.

Put simply, you can't digitally represent every useful non-integer exactly; this would take infinite memory. This is true for even a limited range such as all numbers between 0.0 and 1.0. There are two solutions to this problem: put a hard limit on which numbers you can represent, or allow memory usage to grow without bound at runtime. Even on modern machines, the "grow without bound" approach has problems; on early computers it was impossibly expensive. The solution that was actually adopted was to represent a wide enough range of rational numbers, packed closely enough together, that you could get "close enough for practical purposes" on most calculations. Thus floating-point numbers and floating-point arithmetic were born.
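The "close enough" compromise is easy to see in JavaScript, which stores 0.1, 0.2, and 0.3 each as the nearest representable binary fraction. The sketch below shows the classic consequence. It also shows one common workaround: comparing against a relative tolerance. The helper name nearlyEqual and the 1e-12 tolerance are illustrative choices. They are neither standard APIs nor recommended constants.

```javascript
// 0.1, 0.2 and 0.3 are each stored as the nearest available double, and the
// rounding errors in the sum don't cancel out exactly.
const sum = 0.1 + 0.2;
console.log(sum === 0.3); // false
console.log(sum);         // 0.30000000000000004

// "Close enough for practical purposes": compare within a relative tolerance.
// nearlyEqual and its default tolerance are illustrative, not a standard API.
function nearlyEqual(x, y, relTol = 1e-12) {
  return Math.abs(x - y) <= relTol * Math.max(Math.abs(x), Math.abs(y));
}
console.log(nearlyEqual(sum, 0.3)); // true
```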

The early implementations of floating-point numbers ran into a surprising number of problems[3]. It turned out that floating-point math is like date calculations, internationalization, or encryption: it seems simple at first, but is actually full of difficult edge cases and strange bugs. It didn't help that different hardware often used different floating-point binary representations, which meant that the same code had different results (or bugs) when run on different machines.

To solve these problems, specialists came together to create what became the IEEE 754 floating-point standard. This standard distilled decades of experience into a design that was meant, as much as possible, to "just work". This standard has since been so widely adopted that for many working programmers, non-integer computer arithmetic simply is IEEE 754's version of floating-point math.

The IEEE 754 standard also introduced NaN and all of the strange behavior we discussed in the introduction. NaN had some precedents in earlier floating-point implementations, but this is where it became permanent. Far from being an accident, NaN was created very deliberately with an eye to the future. The real question is, why? Why did all of this experience and work result in such a strange thing?

³ Integer arithmetic on computers has some surprises too, but those are minor compared to what floating-point numbers get up to. Mostly they boil down to different flavors of integer overflow.

The Why of NaN

We know that floating-point numbers go all the way back to the dawn of computers[2]. That means they were designed for (by our standards) extremely slow and memory-constrained machines. Even today, many problems, such as weather prediction, machine learning, and digital animation, would benefit from faster processing and more memory; in these domains, our machines are still time- and memory-constrained.

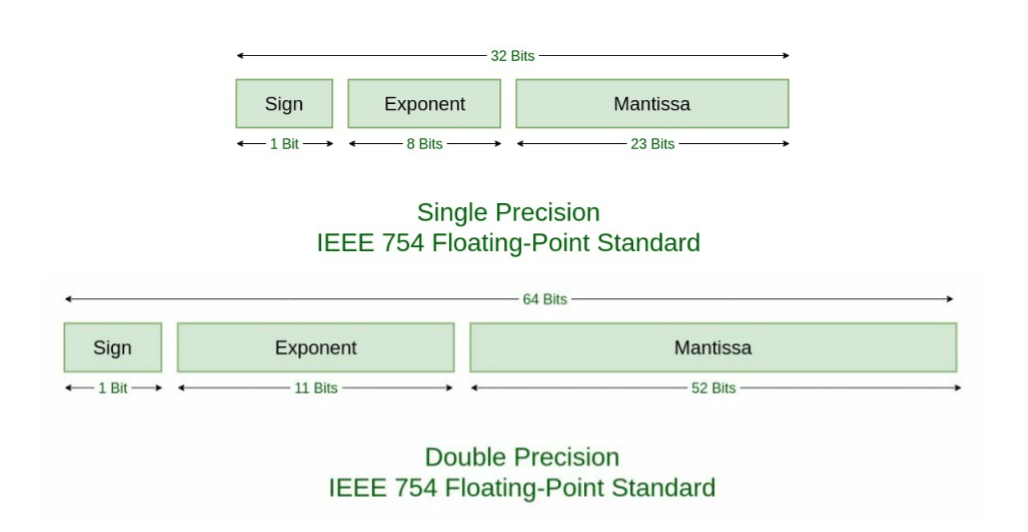

This has two huge effects on floating-point numbers. The first is that floating-point numbers have a fixed binary width. It is hard to exaggerate the performance gains from having a fixed binary width per number; it affects the entire processing pipeline. From hardware acceleration to efficient array access to parallelization to predictive processing, a fixed binary size makes everything faster.
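In JavaScript, every number uses the same 8-byte IEEE 754 double format, and typed arrays expose that fixed width directly. This sketch shows why the fixed width matters for array access. The element at index i always starts at byte 8 × i, so a view can jump straight to it.

```javascript
// Every JavaScript number uses the same 64-bit (8-byte) IEEE 754 format,
// whatever its magnitude, and typed arrays rely on that fixed size.
console.log(Float64Array.BYTES_PER_ELEMENT); // 8

const values = new Float64Array([1, 0.1, 1e300, -2.5]);
console.log(values.byteLength); // 32, i.e. 4 numbers × 8 bytes

// Because every slot has the same size, element i always starts at byte
// offset 8 * i. A second view can jump straight to index 3 without
// scanning the earlier elements.
const lastSlot = new Float64Array(values.buffer, 8 * 3, 1);
console.log(lastSlot[0]); // -2.5
```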

The second effect is more subtle: the floating-point standards prioritize using the available bits to represent more numbers. More specifically, the standards use the available bits to increase the available precision and the available range in the most uniform way possible. This decision improves performance because it greatly simplifies and streamlines the logic needed to perform operations and standardize rounding. It also improves portability, because it means that floating-point numbers behave the "same" across most of the available range, and so applications with very different requirements will still "just work".
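The uniform use of precision can be measured. The sketch below relies on a hypothetical helper, gapAbove. It finds the next representable double by adding one to a number's 64-bit pattern, assuming a finite, positive input below Number.MAX_VALUE. The absolute gap grows with the magnitude of the number. The relative gap stays between Number.EPSILON / 2 and Number.EPSILON across the normal range.

```javascript
// Hypothetical helper: distance from x to the next larger double, found by
// adding one to x's raw 64-bit pattern. Assumes x is finite and positive.
function gapAbove(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x);
  view.setBigUint64(0, view.getBigUint64(0) + 1n);
  return view.getFloat64(0) - x;
}

for (const x of [1, 1000, 1e16]) {
  const gap = gapAbove(x);
  // The absolute gap grows with x, while gap / x stays roughly constant.
  console.log(x, gap, gap / x);
}

console.log(gapAbove(1) === Number.EPSILON); // true
console.log(gapAbove(1e16));                 // 2
```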

These two choices are simple, reasonable, and impressively successful. They have some unavoidable side effects though. Once you've made these decisions, there isn't much room left over in the binary format to represent non-numbers.

This statement might seem very strange if your primary exposure to math has been arithmetic. What kind of math problem has answers that aren't numbers? "Numbers in, numbers out" is the way math generally behaves, so it's easy to miss that it's not the way it always behaves.

Probably the most familiar example of "numbers in, not actually numbers out" is division by zero. The mathematical expression a/b is defined to be the number c such that c × b = a. As long as b is non-zero, this works: c exists and is unique, and thus the expression a/b is a number. If a is non-zero and b is zero, then c does not exist and there is no number that answers the implied question. If a and b are both zero, we get a different failure: every number c is a valid answer, and so the answer is technically the set of all numbers, with no way to choose between them. Just with division, we have found two different possible non-numeric answers to the question "What is a/b?".
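IEEE 754 arithmetic, which JavaScript uses for all ordinary numbers, keeps the two division failures apart. A non-zero value divided by zero becomes a signed infinity, while 0/0 becomes NaN. For contrast, JavaScript's BigInt integers have no such values and throw a RangeError instead. A quick sketch:

```javascript
// Non-zero divided by zero: no answer exists, so IEEE 754 uses a signed
// infinity, with the sign taken from the operands.
console.log(1 / 0);  // Infinity
console.log(-1 / 0); // -Infinity

// Zero divided by zero: every c "works", so the result is NaN.
console.log(0 / 0);  // NaN

// BigInt integers have no infinity or NaN to fall back on, so they throw.
try {
  console.log(1n / 0n);
} catch (err) {
  console.log(err instanceof RangeError); // true
}
```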

A slightly less common example happens with the square root function, written as √a. The square root of a non-negative number a is defined as the unique non-negative number c such that c × c = a. As long as you feed the square root only non-negative real numbers, c is a perfectly good real number. If you somehow try to take the square root of a negative number, you have a problem: there is no real number c such that c × c < 0, and so once again you have a non-numeric answer (i.e. that there is no answer) to a numeric question.
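JavaScript's Math.sqrt follows the same definition and returns NaN when no real square root exists. The exponentiation operator fails the same way for a negative base with a fractional exponent. A minimal sketch:

```javascript
// Non-negative inputs: Math.sqrt returns the non-negative root.
console.log(Math.sqrt(9));  // 3

// Negative inputs: no real c satisfies c × c = a, so the result is NaN.
console.log(Math.sqrt(-1)); // NaN

// Exponentiation fails the same way for a negative base and a
// non-integer exponent.
console.log((-1) ** 0.5);   // NaN
```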

It is tempting to suggest that the non-numeric values 1/0 and √-1 are the same non-number because they are both "empty": there are no real numbers that answer the implied question. This does not work because there are extensions to the real numbers where those questions have answers. For 1/0, you can use the extended real line to define it as positive infinity. The question "What is the square root of -1?" has two answers in the complex plane (i and -i). Once you use one or both of these extensions, the two non-numbers get different answers, so they are not equal to each other. In any case, neither of these values is equal to 0/0, which, again, is technically the set of all real numbers.

These are just three examples of non-numeric results you can get from numeric questions. If you continue to more exotic cases (such as the arcsin of numbers larger than 1 or the logarithm of negative numbers) you can get even more elaborate non-numeric answers. The upshot is that, given the design constraints discussed above, floating-point numbers just don't have the room to represent these edge cases.
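The sketch below gathers several of these questions in JavaScript. Each question lacks a real answer for a different mathematical reason. Yet every one comes back as the same NaN, so the reason is lost. Math.log(0) is included for contrast, because its answer (negative infinity) is one that floating-point can represent.

```javascript
// Four different questions with no real-number answer...
const failures = {
  "0 / 0": 0 / 0,
  "sqrt(-1)": Math.sqrt(-1),
  "asin(2)": Math.asin(2),
  "log(-1)": Math.log(-1),
};

// ...all collapse into the same NaN, so the reason for each failure is lost.
for (const [question, answer] of Object.entries(failures)) {
  console.log(question, "->", answer, Number.isNaN(answer));
}

// Compare log(0): its limit is -Infinity, which floating-point *can* represent.
console.log(Math.log(0)); // -Infinity
```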

And this is why we have NaN. Math, it turns out, is more complex than just numbers. On the other hand, floating-point numbers are highly optimized to just be numbers. When these two facts collide, something has to give. The floating-point standard "gives" by using some of the left-over bit patterns (ones that don't make sense in the standard encoding) to define three non-numeric values: the two signed infinities, and NaN⁴. The signed infinities are almost numbers; after all, they are sometimes used as part of the extended real number line, which means that they at least have clear identities. NaN, on the other hand, is defined by what it isn't, rather than what it is.
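A DataView lets you inspect the left-over bit patterns from JavaScript. The helper fields below is hypothetical and was written only for this sketch. It splits a double into the IEEE 754 sign bit, 11-bit exponent, and 52-bit fraction. Infinities and NaN are exactly the values whose exponent bits are all ones. Which fraction bits an engine uses for NaN is implementation-defined, so the sketch only checks that they are non-zero.

```javascript
// Hypothetical helper: split a double's 64 bits into IEEE 754 fields.
function fields(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x);
  const bits = view.getBigUint64(0);
  return {
    sign: Number(bits >> 63n),                // 1 bit
    exponent: Number((bits >> 52n) & 0x7ffn), // 11 bits
    fraction: bits & ((1n << 52n) - 1n),      // 52 bits
  };
}

// Finite numbers only use exponent values 0 to 2046.
console.log(fields(1.5));              // exponent 1023, non-zero fraction
console.log(fields(Number.MAX_VALUE)); // exponent 2046, the largest finite one
// The all-ones exponent (2047) is reserved for the non-numbers.
console.log(fields(Infinity));  // sign 0, exponent 2047, fraction 0n
console.log(fields(-Infinity)); // sign 1, exponent 2047, fraction 0n
const nan = fields(NaN);
console.log(nan.exponent === 2047 && nan.fraction !== 0n); // true
```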

This explains NaN's many odd behaviors. There's no reason to believe that two different NaNs represent the same "non-number", and so NaN !== NaN. In turn, this leads to the situation where you can get a !== a. For the sake of efficiency, floating-point comparisons are performed by value, rather than by reference. Adding extra logic around every floating-point comparison to compare by reference just for this case isn't worth the cost. After all, there's no practical use for the comparison⁵ beyond pranking the unwary and posting memes to the internet. So we let a !== a in this case, and reap a multitude of performance and portability benefits in all other cases.
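JavaScript shows both sides of this trade-off. Its arithmetic operators keep the IEEE 754 rule. Where identity matters more than arithmetic, however, such as searching arrays or using values as keys, the language provides comparisons that treat NaN as equal to itself. A short sketch:

```javascript
const values = [1, NaN, 3];

// indexOf compares with ===, so it can never find NaN.
console.log(values.indexOf(NaN)); // -1

// includes uses SameValueZero, which treats NaN as equal to NaN.
console.log(values.includes(NaN)); // true

// Object.is (SameValue) also treats NaN as equal to itself, and
// additionally tells 0 and -0 apart.
console.log(Object.is(NaN, NaN), Object.is(0, -0)); // true false

// Set and Map keys use SameValueZero as well, so NaN works as a key.
console.log(new Set([NaN, NaN]).size); // 1
```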

Similar logic leads to all other numeric comparisons with NaN being false; we have to pick a value for the comparisons, and it's more trouble than it's worth to expand the type system for booleans, at least in general. We deliberately lose information for the sake of efficiency.
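The cost of that lost information shows up in ordinary code. In this sketch, loopMax is a hypothetical hand-written maximum built on the > operator. Every comparison with NaN is false, so its result depends on where the NaN happens to sit. The built-in Math.max, by contrast, propagates NaN consistently.

```javascript
// Hypothetical helper: a hand-written maximum that relies on ">".
function loopMax(xs) {
  let best = xs[0];
  for (const x of xs.slice(1)) {
    // "x > best" is false whenever either side is NaN, so a NaN later in
    // the array is never picked, and a NaN already in `best` never leaves.
    if (x > best) {
      best = x;
    }
  }
  return best;
}

console.log(loopMax([3, NaN, 7, 1])); // 7   (the NaN is silently skipped)
console.log(loopMax([NaN, 3, 7, 1])); // NaN (the NaN "wins" by going first)
console.log(Math.max(3, NaN, 7, 1));  // NaN (the built-in propagates it)
```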

And that’s why we have NaN, in all its strangeness. Starting from a demand for efficient and "good enough" representations of real numbers, we end up with this very odd "other" category. It is not going away, though; as long as we have computations that are seriously time- and memory-constrained, we are going to need it. Working around NaN, when it pops up, is simply part of a developer's job.

⁴ Technically there are multiple bit patterns that represent NaN, and in theory you could distinguish between them. In practice, ordinary code almost never does (beyond the standard's split between "quiet" and "signaling" NaNs), probably because nobody has come up with a widely usable encoding. All NaNs are treated equally and might as well be a single value.

⁵ The only practical thing programmers ever actually use x !== x for is to test whether x is NaN, which means they already know what they are about.

Alternatives to NaN

Programmers will be using NaN for the foreseeable future, but the key is knowing when to use it. Programming languages in general, and JavaScript in particular, encourage the use of floating-point numbers (and therefore potentially NaN) even when they are not the best tool for the job. This is unfortunate, because floating-point numbers are not actually the same as "all computer numbers," and should only be used when needed.
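JavaScript makes the point sharply, because its ordinary number type is always an IEEE 754 double, even for values that look like integers. This short sketch shows where integers stop being exact. It also shows how BigInt, a separate exact integer type, avoids the problem.

```javascript
// Ordinary JavaScript numbers are all IEEE 754 doubles, integers included.
console.log(typeof 5, typeof 5.5);    // number number
console.log(Number.MAX_SAFE_INTEGER); // 9007199254740991, i.e. 2 ** 53 - 1

// Beyond that, consecutive integers can no longer all be represented,
// and the + 1 is rounded away.
console.log(2 ** 53 + 1 === 2 ** 53); // true

// BigInt is a separate, exact integer type, selected with the "n" suffix.
console.log(2n ** 53n + 1n === 9007199254740993n); // true
console.log(typeof (2n ** 53n));                    // bigint
```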

So what can be used besides floating-point? There are many options that are already supported in existing programming languages or environments. As usual, they all have trade-offs (the basic logic from the history of floating-point numbers still applies today), so it is up to the implementer to decide what the best solution is.

Let’s consider some options:

- Have the program abort/throw an exception when a bad operation is performed.
  - Pros:
    - Bugs that produce NaN are found early
    - This approach can be memory- and time-efficient (e.g. the computer can use floating-point efficiently until an error occurs)
  - Cons:
    - In many contexts, NaNs should not be fatal, as they can be contained or interpreted reasonably well

- Use complex numbers directly, as supported by Fortran, MATLAB, Python, or Julia
  - Pros:
    - You lose less information when complex numbers model the problem well
    - More readable and performant than rolling your own complex numbers
  - Cons:
    - Doubles the amount of memory needed
    - Still uses floating-point for the real and imaginary parts of complex numbers, and so doesn't solve many common causes of NaN (0/0, 0 × infinity, etc.)
    - Often requires understanding complex analysis

- Use a high-level computer algebra system
  - Pros:
    - Math is performed symbolically rather than numerically, so common irrational numbers and non-numbers (complex numbers, sets of numbers) are stored and presented without approximation or compression
  - Cons:
    - Much slower and much less memory-efficient than floating-point
    - Computer algebra systems sometimes silently fall back on floating-point, reintroducing all the usual problems with it
    - The most powerful symbolic algebra systems are proprietary and expensive
    - Much higher barrier to entry than most programming languages

- Use properly scaled integers rather than floating-point
  - Pros:
    - Often faster than floating-point
    - Can be controlled more precisely than floating-point
    - Should always be used for currency calculations
  - Cons:
    - Not actually practical for many numerical problems
    - More complex to program and maintain
    - Silent integer overflow becomes a problem again

- Big integers and big decimals
  - Pros:
    - More detailed control over behavior
    - Potentially unbounded precision and range
  - Cons:
    - Much slower
    - Because precision and range are unbounded, memory use and run time can blow up unexpectedly
    - Fewer languages support them, and existing implementations have wildly different interfaces

- Rationals, i.e. representing fractions exactly as pairs of integers
  - Pros:
    - No loss of precision for precisely defined rational numbers
  - Cons:
    - Limited language support (built into a few languages such as Julia, and available in Python's standard fractions module; elsewhere you may need to roll your own)
    - Any weaknesses inherited from integer support (e.g. integer overflow with fixed-width integers, or efficiency losses with bigints)
    - Don't help with irrational numbers
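You can try several of these options without leaving JavaScript. The sketch below is illustrative only. The helpers checked, addCents, formatCents, gcd, rational, and addRational are hypothetical and were written for this example; they are not library APIs. The sketch covers three approaches:

- a fail-fast guard (the first option);
- integer cents for currency (the scaled-integer option), using BigInt so overflow is not a concern;
- exact rationals stored as pairs of BigInts.

```javascript
// Option: abort/throw. A hypothetical guard that fails fast instead of
// letting NaN (or an infinity) flow into later calculations.
function checked(x, label = "value") {
  if (!Number.isFinite(x)) {
    throw new RangeError(`${label} is not a finite number: ${x}`);
  }
  return x;
}

// Option: scaled integers. Money is stored as a whole number of cents
// (BigInt, so there is no silent overflow), and sums are exact.
function addCents(...amounts) {
  return amounts.reduce((total, c) => total + c, 0n);
}
function formatCents(cents) {
  const sign = cents < 0n ? "-" : "";
  const abs = cents < 0n ? -cents : cents;
  // BigInt division truncates, giving whole units; % gives the cents.
  return `${sign}${abs / 100n}.${String(abs % 100n).padStart(2, "0")}`;
}

// Option: rationals. A fraction is a BigInt numerator/denominator pair,
// kept in lowest terms with a positive denominator.
function gcd(a, b) {
  while (b !== 0n) {
    [a, b] = [b, a % b];
  }
  return a < 0n ? -a : a;
}
function rational(num, den) {
  if (den === 0n) {
    throw new RangeError("zero denominator");
  }
  if (den < 0n) {
    [num, den] = [-num, -den];
  }
  const g = gcd(num, den);
  return { num: num / g, den: den / g };
}
function addRational(p, q) {
  return rational(p.num * q.den + q.num * p.den, p.den * q.den);
}

// Usage
const exactSum = addRational(rational(1n, 10n), rational(2n, 10n));
console.log(`${exactSum.num}/${exactSum.den}`); // 3/10, unlike 0.1 + 0.2
console.log(formatCents(addCents(10n, 20n)));    // 0.30
console.log(formatCents(-1999n));                // -19.99
try {
  checked(Math.sqrt(-1), "sqrt(-1)");
} catch (err) {
  console.log(err.message); // sqrt(-1) is not a finite number: NaN
}
```

The trade-offs listed above still apply. Each operation here is slower than a plain floating-point addition, and the rational numerators and denominators can grow without bound.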

Conclusions

In summary, NaNs are exactly what their name says: they are answers to mathematical questions that aren't numbers, even though for performance reasons we try to squeeze them into a number-shaped hole. Their odd behavior comes from the fact that there are so many types of non-numeric answers, and we just don't have room in standard floating-point representations to, well, represent them.

NaNs are not going to go away, or change behavior, as long as we need floating-point numbers. Floating-point numbers are essential for high-intensity computing. However, they are definitely overused, and it benefits developers to know what alternatives are available to make the best choice for their use case.

References

[2] http://homepages.cs.ncl.ac.uk/brian.randell/Papers-Articles/398.pdf

[3] https://people.eecs.berkeley.edu/~wkahan/ieee754status/754story.html

About Lucid

Lucid Software is the leader in visual collaboration and work acceleration, helping teams see and build the future by turning ideas into reality. Its products include the Lucid Visual Collaboration Suite (Lucidchart and Lucidspark) and airfocus. The Lucid Visual Collaboration Suite, combined with powerful accelerators for business agility, cloud, and process transformation, empowers organizations to streamline work, foster alignment, and drive business transformation at scale. airfocus, an AI-powered product management and roadmapping platform, extends these capabilities by helping teams prioritize work, define product strategy, and align execution with business goals. The most used work acceleration platform by the Fortune 500, Lucid's solutions are trusted by more than 100 million users across enterprises worldwide, including Google, GE, and NBC Universal. Lucid partners with leaders such as Google, Atlassian, and Microsoft, and has received numerous awards for its products, growth, and workplace culture.